In this article you will learn about the different types of validity in research in detail.

In social science research, validity is the degree to which a study measures what it intends to measure. Different types of validity exist and when designing a study, researchers need to take into account which types of validity are most important for their particular goals.

Each type of validity has different implications for the research being conducted, so it is worth considering all of them when designing and carrying out a study in order to support accurate results.

Validity

In terms of measuring methods, validity refers to an instrument’s capacity to measure what it is intended to measure. In other words, it is the extent to which the researcher actually measured what they set out to measure.

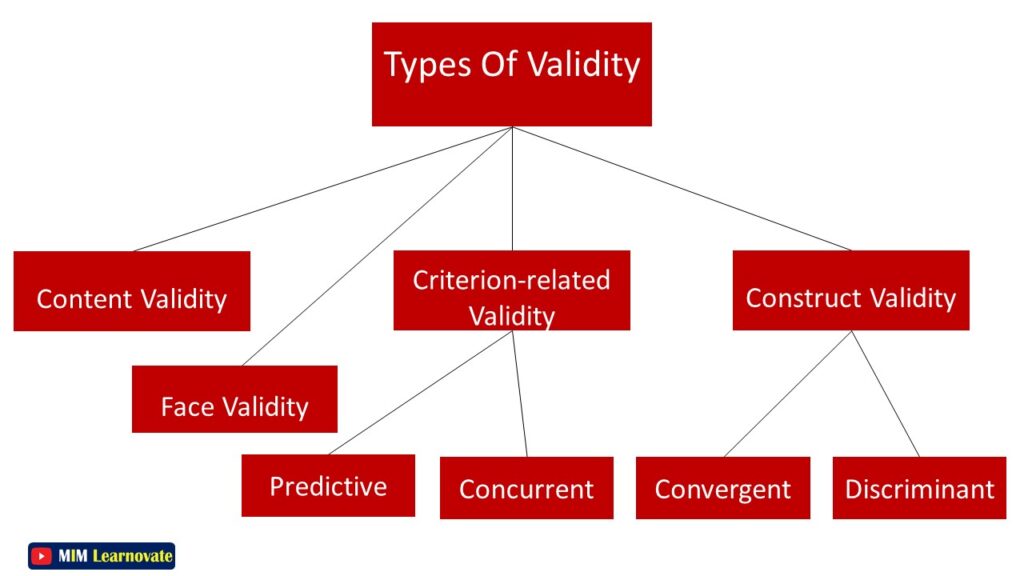

There are four types of validity in research:

- Content Validity

- Criterion-related Validity

- Construct Validity

- Face Validity

Content Validity

The content validity of the measure ensures that it has an adequate and representative selection of items that tap the concept. Content validity is the extent to which a measure accurately captures the construct it is meant to measure. The stronger the content validity, the more the scale items represent the domain or universe of the concept being measured.

A panel of experts can testify to the instrument’s content validity.

✔ According to Kidder and Judd (1986), a test meant to quantify degrees of speech impairment can be regarded as valid if it is so appraised by a group of experts (i.e., professional speech therapists).

It is important for content validity to be high in order for a measure to be useful.

There are several ways to assess content validity, including

- Expert judgment

- Content analysis

Expert judgment

Expert judgment involves having experts in the field review the items on a measure and make judgments about whether or not they are appropriate for assessing the construct of interest.
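Expert judgments of this kind can also be summarized numerically. The sketch below uses Lawshe's content validity ratio (CVR), a technique this article does not itself describe, and it assumes each expert simply rates an item as "essential" or not. The item names and vote counts are hypothetical.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), which ranges from -1 to +1.

    n_essential: number of experts who rated the item "essential"
    n_experts:   total number of experts on the panel
    """
    if n_experts <= 0:
        raise ValueError("n_experts must be positive")
    if not 0 <= n_essential <= n_experts:
        raise ValueError("n_essential must be between 0 and n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


# Hypothetical panel of 10 experts: how many rated each item "essential".
n_experts = 10
essential_votes = {"item_1": 10, "item_2": 8, "item_3": 5, "item_4": 2}

cvr_by_item = {
    item: content_validity_ratio(votes, n_experts)
    for item, votes in essential_votes.items()
}

for item, cvr in cvr_by_item.items():
    print(f"{item}: CVR = {cvr:+.2f}")
```

A CVR near +1 means almost all experts consider the item essential, 0 means the panel is evenly split, and negative values mean most experts do not consider it essential. The cutoff for keeping an item depends on the panel size and is not fixed here.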

Content analysis

Content analysis involves analyzing the items on a measure to see if they cover all aspects of the construct of interest.

Both expert judgment and content analysis are important methods for assessing content validity.

However, content validity should not be confused with predictive validity. Content validity is a judgment about how well the items cover the domain of the concept, whereas predictive validity concerns how well scores predict an outcome. A measure can predict an outcome well without covering the full content of a construct, and the reverse is also possible.

Content validity is important because it is one way to determine whether a test is measuring what it is supposed to be measuring. If a test has good content validity, then we can have more confidence that its results reflect the whole concept of interest rather than only part of it.

Example

A math instructor creates an end-of-semester calculus test for her students. The exam should cover every type of calculus presented in class. If specific types of calculus are omitted, the results may not accurately reflect students’ understanding of the topic. Similarly, if she includes non-calculus questions, the findings are no longer a valid test of calculus knowledge.

Face Validity

Face validity suggests that the items intended to test a concept appear to measure the idea on the surface. It refers to the degree to which a test appears to measure what it is supposed to measure.

Face validity is not an accurate predictor of a test’s actual psychometric properties, but it is important for determining whether a test will be accepted by those who will be taking it. If a test has poor face validity, test-takers may resist taking the test or may not take it seriously, which can lead to invalid results.

There are several ways to assess face validity.

✔ Ask experts in the field whether they believe the test measures what it is supposed to measure.

✔ Ask potential test-takers whether they believe the test is a good measure of the desired construct.

Example

You design a questionnaire to evaluate the consistency of people’s food habits. You go over the items in the questionnaire, which include questions regarding every meal of the day as well as snacks taken in between for every day of the week. On the surface, the survey appears to be a solid depiction of what you want to test, so you judge it to have high face validity.

Another example of face validity would be a personality test that included items that assessed whether the respondent was outgoing, shy, etc.

Criterion-related Validity

When a measure differentiates individuals on a criterion that it is expected to predict, criterion-related validity is established. This type of validity demonstrates how well a measure correlates with an established external criterion, such as performance on another test or a real-world behavior.

This type of validity is important when choosing a measure to use for decision making, because it can help to ensure that the results of the measure are accurate.

Example

If you want to predict how well someone will do on a test, you would want to use a measure whose scores have been shown to correlate with performance on that test, that is, a measure with good criterion-related validity.

If you want to know whether or not a new math test can predict how well students will do on the state math test, you would look at the criterion-related validity of that new math test.

There are two types of criterion-related validity:

- Predictive validity

- Concurrent validity

Predictive Validity

Predictive validity is concerned with the ability of a measure to predict future performance on some criterion. It is used to assess whether a predictor variable can accurately predict an outcome variable that is measured later.

Predictive validity refers to a measuring instrument’s ability to distinguish between persons in relation to a future criterion. It is usually expressed as a correlation coefficient.

✔ A high predictive validity means that the measure can accurately predict future performance.

✔ A low predictive validity means that the measure is not a good predictor of future performance.

Predictive validity is important for choosing measures that will be useful for predicting future performance.
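Since predictive validity is usually expressed as a correlation coefficient, a small sketch may help. It computes Pearson's r between scores on a predictor and a criterion measured later. The variable names and all data values are hypothetical and chosen only for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


# Hypothetical data: admission test scores (predictor) and the same
# students' grade point averages one year later (future criterion).
admission_scores = [52, 61, 70, 75, 83, 90]
first_year_gpa = [2.1, 2.6, 2.9, 3.0, 3.4, 3.8]

r = pearson_r(admission_scores, first_year_gpa)
print(f"Predictive validity coefficient: r = {r:.2f}")
print(f"Share of criterion variance explained: r^2 = {r * r:.2f}")
```

The closer r is to 1 (or to -1 for an inverse relationship), the better the predictor tracks the later criterion. What counts as "high" depends on the field and the decision being made.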

Example:

If you are interested in predicting how well students will do on a specific test, you would want to choose a measure that has been shown to predict scores on that test. However, if you are interested in predicting how well students will do in school overall, you would want a measure that has been shown to predict overall school performance.

If you were interested in predicting whether or not someone would get a job offer based on their interview performance, you would want the interview ratings to have strong predictive validity. When predictive validity is expressed as a correlation coefficient, a strong value requires that the relationship between the predictor and outcome variables be approximately linear and that both be measured with little error. Additionally, the predictor variable must be able to explain a substantial portion of the variance in the outcome variable.

Concurrent Validity

Concurrent validity is concerned with the relationship between a measure and some other measure that is being used as a criterion at the same time. It assesses how well a measure agrees with results that have already been established.

Concurrent validity is established when the scale discriminates across persons who are known to be different, implying that they should score differently on the instrument. It is the extent to which a measure agrees with a criterion for the same construct that is assessed at the same time. Concurrent validity can be established through correlations between measures, or by using known-groups comparisons.

Concurrent validity is an important tool for researchers to understand how well a new measure correlates with an existing measure.

Example

A new intelligence test might be given to a group of students who have already taken an established intelligence test. The results of the new test can then be compared to the results of the established test to see how well the two measures correlate. This type of validity can be used to judge the quality of a new measure.
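The known-groups comparison mentioned in this section can be sketched as follows. The example assumes two groups that are already known to differ on the construct and summarizes the gap in their scores with Cohen's d, a standardized mean difference. The choice of Cohen's d, the group labels, and the scores are all assumptions made for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardized mean difference between two groups, using the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    if pooled_var == 0:
        raise ValueError("effect size is undefined when scores do not vary")
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical scores on a new scale for two groups known to differ
# on the construct the scale is meant to measure.
known_high_group = [28, 31, 25, 30, 27, 29]
known_low_group = [12, 15, 10, 14, 13, 11]

d = cohens_d(known_high_group, known_low_group)
print(f"Mean difference in pooled SD units: d = {d:.2f}")
```

If the scale separates the groups in the expected direction, d is clearly positive. A value near zero would suggest that the scale does not discriminate between people who are known to be different.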

Construct Validity

Construct validity concerns how well the results obtained from using the measure fit the theories around which it is constructed. The term is used in the psychological literature to refer to the extent to which a measure accurately reflects the construct it purports to measure.

In order for a measure to be said to have construct validity,

✔ It must first be shown to be reliable – that is, it must produce consistent results across repeated measurements.

Once reliability has been established, researchers can then begin to look at whether or not the measure is actually tapping into the construct of interest.

Example

If you were interested in measuring anxiety, you would want to show that your measure is more strongly correlated with other measures of anxiety than with measures of distinct constructs such as depression.

In order to establish construct validity, researchers need to provide evidence that the constructs they are interested in actually exist. One way to do this is to show that the items on a measure are tapping into a single construct and that this construct is related to other constructs in the way that theory predicts.

Construct Validity is assessed through

- Convergent Validity

- Discriminant Validity

Convergent Validity

When the scores obtained with two separate instruments assessing the same concept are highly associated, convergent validity is established. Convergent validity is the degree to which different measures of the same construct produce similar results. It is important to establish convergent validity when using multiple measures of a construct, such as self-report and performance-based measures, in order to ensure that the results are consistent across measures.

There are a few ways to establish convergent validity, including

- Correlation Analysis

- Factor Analysis

Correlation Analysis

Correlation is a statistical measure that can be used to assess the strength of the relationship between two variables.

Factor Analysis

Factor analysis is a statistical technique that can be used to identify underlying factors or dimensions in a set of data.

When different measures of a construct are shown to converge, it provides confidence that they are capturing the same construct. In addition to statistical methods, researchers often use theoretical arguments and empirical evidence from previous studies to support convergent validity.

Let’s say you are interested in studying the relationship between hours of sleep and academic performance. To measure hours of sleep, you could use both a self-report survey and a second instrument, such as a sleep diary. In order to establish convergent validity, you would want to see a strong correlation between the two measures of sleep, since both are meant to capture the same construct.

Discriminant Validity

Discriminant validity is established when two variables are projected to be uncorrelated based on theory, and the scores obtained by measuring them are empirically confirmed to be so.

It is used to check whether two constructs that are supposed to be different are in fact being measured as different things. A measure with discriminant validity does not correlate strongly with measures of constructs to which it should be unrelated.

✔ For discriminant validity to be present, measures of the two constructs should show a low correlation with each other, consistent with what theory predicts.

✔ If discriminant validity is not present, then it is possible that the two constructs are actually measuring the same thing.

Discriminant validity is important because it allows researchers to be confident that their measure captures the intended construct rather than a different, overlapping one.

Example

A good example of discriminant validity would be a study showing that scores on a measure of aggression are only weakly correlated with scores on a measure of a construct that theory says is unrelated to aggression. This would demonstrate that the aggression measure is not simply picking up that other construct.

By contrast, showing that males and females differ on a measure of aggression, or that people of different ages differ on a measure of intelligence, is a known-groups comparison. That kind of evidence shows that the measure discriminates between groups, which supports concurrent validity rather than discriminant validity.
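Convergent and discriminant validity are often checked together by comparing correlations. The sketch below assumes three sets of scores from the same respondents: a new scale, an established scale for the same construct, and a measure that theory says is unrelated. All names and data are hypothetical, and the 0.7 and 0.3 cutoffs are arbitrary illustration values, not accepted standards.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for the same six respondents.
new_anxiety_scale = [10, 14, 18, 22, 26, 30]
established_anxiety_scale = [12, 15, 17, 24, 25, 31]  # same construct
height_cm = [170, 160, 175, 165, 172, 168]            # theoretically unrelated

convergent_r = pearson_r(new_anxiety_scale, established_anxiety_scale)
discriminant_r = pearson_r(new_anxiety_scale, height_cm)

# Illustrative cutoffs only; real studies justify their own criteria.
print(f"Convergent r = {convergent_r:.2f} (high expected): {convergent_r > 0.7}")
print(f"Discriminant r = {discriminant_r:.2f} (low expected): {abs(discriminant_r) < 0.3}")
```

Evidence for construct validity is stronger when both patterns hold at once: a high correlation with measures of the same construct and a low correlation with measures of unrelated ones.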

Types of Validity Used in Methodologies

| Question | Type of validity |
| --- | --- |
| Is there a low correlation between the measure and a variable that is meant to be unrelated to it? | Discriminant validity |
| Does the instrument capture the concept as theorized? | Construct validity |
| Is the concept adequately measured by the measure? | Content validity |
| Do two instruments measuring the concept have a strong correlation? | Convergent validity |
| Do “experts” confirm that the instrument measures what its name implies? | Face validity |
| Does the measure distinguish in a way that aids in the prediction of a criterion variable at the moment? | Concurrent validity |
| Does the measure distinguish persons in a way that aids in the prediction of a future criterion? | Predictive validity |
| Does the measure distinguish in a way that aids in the prediction of a criterion variable? | Criterion-related validity |
